OK, the title might not be the best… I just would like to add more detail to content you can already find in other blogs (E.g. this nice one from Philippe Fierens http://pfierens.blogspot.com/2020/04/19c-data-guard-series-part-iii-adding.html).

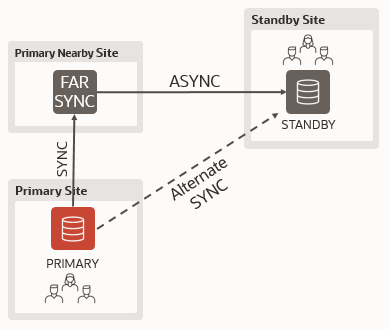

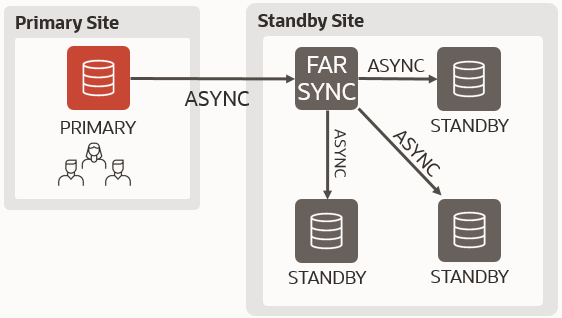

I have this Cascade Standby configuration:

DGMGRL> connect /

Connected to "TOOLCDB1_SITE1"

Connected as SYSDG.

DGMGRL> show configuration;

Configuration - toolcdb1

Protection Mode: MaxPerformance

Members:

toolcdb1_site1 - Primary database

toolcdb1_site2 - Physical standby database

toolcdx1_site2 - Physical standby database (receiving current redo)

Fast-Start Failover: Disabled

Configuration Status:

SUCCESS (status updated 42 seconds ago)Years ago I wrote this whitepaper about cascaded standbys:

https://fr.slideshare.net/ludovicocaldara/2014-603-caldarappr

While it is still relevant for non-CDBs, things have changed with Multitenant architecture.

In my config, the Oracle Database version is 19.7 and the databases are actually CDBs. No Grid Infrastructure, non-OMF datafiles.

It is important to highlight that a lot of things have changed since 12.1. And because 19c is the LTS version now, it does not make sense to try anything older.

First, I just want to make sure that my standbys are aligned.

Primary:

alter system switch logfile;

1st Standby alert log:

2020-07-07T10:20:23.370868+02:00 rfs (PID:6408): Archived Log entry 58 added for B-1044796516.T-1.S-39 ID 0xf15601c6 LAD:2 rfs (PID:6408): No SRLs available for T-1 2020-07-07T10:20:23.386410+02:00 rfs (PID:6408): Opened log for T-1.S-40 dbid 4048667172 branch 1044796516 2020-07-07T10:20:24.552766+02:00 PR00 (PID:6478): Media Recovery Log /u03/oradata/fra/TOOLCDB1_SITE2/archivelog/2020_07_07/o1_mf_1_39_hj8cs7vo_.arc PR00 (PID:6478): Media Recovery Waiting for T-1.S-40 (in transit)

2nd Standby alert log:

2020-07-07T10:20:31.051281+02:00 rfs (PID:6498): Opened log for T-1.S-39 dbid 4048667172 branch 1044796516 2020-07-07T10:20:31.150748+02:00 rfs (PID:6498): Archived Log entry 38 added for B-1044796516.T-1.S-39 ID 0xf15601c6 LAD:2 2020-07-07T10:20:31.862337+02:00 PR00 (PID:6718): Media Recovery Log /u03/oradata/fra/TOOLCDX1_SITE2/archivelog/2020_07_07/o1_mf_1_39_hj8d2h1k_.arc PR00 (PID:6718): Media Recovery Waiting for T-1.S-40

Then, I create a pluggable database (from PDB$SEED):

SQL> CREATE PLUGGABLE DATABASE LATERALUS ADMIN USER PDBADMIN IDENTIFIED BY "NfrwTgbjwq7MbPNT92cH" ROLES=(DBA)

2 FILE_NAME_CONVERT=('/pdbseed/','/LATERALUS/')

3 DEFAULT TABLESPACE USERS DATAFILE '/u02/oradata/TOOLCDB1/data/LATERALUS/USERS01.dbf' SIZE 50M AUTOEXTEND ON NEXT 50M MAXSIZE 1G;

Pluggable database created.

SQL> ALTER PLUGGABLE DATABASE LATERALUS OPEN;

Pluggable database altered.

SQL> ALTER PLUGGABLE DATABASE LATERALUS SAVE STATE;

Pluggable database altered.On the first standby I get:

2020-07-07T10:23:33.148457+02:00 rfs (PID:6408): Archived Log entry 60 added for B-1044796516.T-1.S-40 ID 0xf15601c6 LAD:2 rfs (PID:6408): No SRLs available for T-1 2020-07-07T10:23:33.184335+02:00 rfs (PID:6408): Opened log for T-1.S-41 dbid 4048667172 branch 1044796516 2020-07-07T10:23:33.887665+02:00 PR00 (PID:6478): Media Recovery Log /u03/oradata/fra/TOOLCDB1_SITE2/archivelog/2020_07_07/o1_mf_1_40_hj8d27d0_.arc Recovery created pluggable database LATERALUS Recovery copied files for tablespace SYSTEM Recovery successfully copied file /u02/oradata/TOOLCDB1/data/LATERALUS/system01.dbf from /u02/oradata/TOOLCDB1/data/pdbseed/system01.dbf LATERALUS(4):WARNING: File being created with same name as in Primary LATERALUS(4):Existing file may be overwritten LATERALUS(4):Recovery created file /u02/oradata/TOOLCDB1/data/LATERALUS/system01.dbf LATERALUS(4):Successfully added datafile 16 to media recovery LATERALUS(4):Datafile #16: '/u02/oradata/TOOLCDB1/data/LATERALUS/system01.dbf' 2020-07-07T10:23:35.846985+02:00 Recovery copied files for tablespace SYSAUX Recovery successfully copied file /u02/oradata/TOOLCDB1/data/LATERALUS/sysaux01.dbf from /u02/oradata/TOOLCDB1/data/pdbseed/sysaux01.dbf LATERALUS(4):WARNING: File being created with same name as in Primary LATERALUS(4):Existing file may be overwritten LATERALUS(4):Recovery created file /u02/oradata/TOOLCDB1/data/LATERALUS/sysaux01.dbf LATERALUS(4):Successfully added datafile 17 to media recovery LATERALUS(4):Datafile #17: '/u02/oradata/TOOLCDB1/data/LATERALUS/sysaux01.dbf' 2020-07-07T10:23:41.004383+02:00 Recovery copied files for tablespace UNDOTBS1 Recovery successfully copied file /u02/oradata/TOOLCDB1/data/LATERALUS/undotbs01.dbf from /u02/oradata/TOOLCDB1/data/pdbseed/undotbs01.dbf LATERALUS(4):WARNING: File being created with same name as in Primary LATERALUS(4):Existing file may be overwritten LATERALUS(4):Recovery created file /u02/oradata/TOOLCDB1/data/LATERALUS/undotbs01.dbf LATERALUS(4):Successfully added datafile 18 to media recovery LATERALUS(4):Datafile #18: '/u02/oradata/TOOLCDB1/data/LATERALUS/undotbs01.dbf' 2020-07-07T10:23:42.191607+02:00 (4):WARNING: File being created with same name as in Primary (4):Existing file may be overwritten (4):Recovery created file /u02/oradata/TOOLCDB1/data/LATERALUS/USERS01.dbf (4):Successfully added datafile 19 to media recovery (4):Datafile #19: '/u02/oradata/TOOLCDB1/data/LATERALUS/USERS01.dbf' PR00 (PID:6478): Media Recovery Waiting for T-1.S-41 (in transit)

On the second:

2020-07-07T10:24:31.393410+02:00 rfs (PID:6500): Opened log for T-1.S-40 dbid 4048667172 branch 1044796516 2020-07-07T10:24:31.460391+02:00 rfs (PID:6500): Archived Log entry 39 added for B-1044796516.T-1.S-40 ID 0xf15601c6 LAD:2 2020-07-07T10:24:32.360726+02:00 PR00 (PID:6718): Media Recovery Log /u03/oradata/fra/TOOLCDX1_SITE2/archivelog/2020_07_07/o1_mf_1_40_hj8d9zd7_.arc Recovery created pluggable database LATERALUS 2020-07-07T10:24:36.000250+02:00 Recovery copied files for tablespace SYSTEM Recovery successfully copied file /u02/oradata/TOOLCDX1/data/LATERALUS/system01.dbf from /u02/oradata/TOOLCDX1/data/pdbseed/system01.dbf LATERALUS(4):Recovery created file /u02/oradata/TOOLCDX1/data/LATERALUS/system01.dbf LATERALUS(4):Successfully added datafile 16 to media recovery LATERALUS(4):Datafile #16: '/u02/oradata/TOOLCDX1/data/LATERALUS/system01.dbf' 2020-07-07T10:24:40.657596+02:00 Recovery copied files for tablespace SYSAUX Recovery successfully copied file /u02/oradata/TOOLCDX1/data/LATERALUS/sysaux01.dbf from /u02/oradata/TOOLCDX1/data/pdbseed/sysaux01.dbf LATERALUS(4):Recovery created file /u02/oradata/TOOLCDX1/data/LATERALUS/sysaux01.dbf LATERALUS(4):Successfully added datafile 17 to media recovery LATERALUS(4):Datafile #17: '/u02/oradata/TOOLCDX1/data/LATERALUS/sysaux01.dbf' 2020-07-07T10:24:47.688298+02:00 Recovery copied files for tablespace UNDOTBS1 Recovery successfully copied file /u02/oradata/TOOLCDX1/data/LATERALUS/undotbs01.dbf from /u02/oradata/TOOLCDX1/data/pdbseed/undotbs01.dbf LATERALUS(4):Recovery created file /u02/oradata/TOOLCDX1/data/LATERALUS/undotbs01.dbf LATERALUS(4):Successfully added datafile 18 to media recovery LATERALUS(4):Datafile #18: '/u02/oradata/TOOLCDX1/data/LATERALUS/undotbs01.dbf' (4):Recovery created file /u02/oradata/TOOLCDX1/data/LATERALUS/USERS01.dbf (4):Successfully added datafile 19 to media recovery (4):Datafile #19: '/u02/oradata/TOOLCDX1/data/LATERALUS/USERS01.dbf' 2020-07-07T10:24:48.924510+02:00 PR00 (PID:6718): Media Recovery Waiting for T-1.S-41

So, yeah, not having OMF might get you some warnings like: WARNING: File being created with same name as in Primary

But it is good to know that the cascade standby deals well with new PDBs.

Of course, this is not of big interest as I know that the problem with Multitenant comes from CLONING PDBs from either local or remote PDBs in read-write mode.

So let’s try a relocate from another CDB:

CREATE PLUGGABLE DATABASE PNEUMA FROM PNEUMA@LUDOCDB1_PNEUMA_tempclone

RELOCATE AVAILABILITY NORMAL

file_name_convert=('/LUDOCDB1/data/PNEUMA/','/TOOLCDB1/data/PNEUMA/')

PARALLEL 2;

Pluggable database created.

SQL> ALTER PLUGGABLE DATABASE PNEUMA OPEN;

Pluggable database altered.

SQL> ALTER PLUGGABLE DATABASE PNEUMA SAVE STATE;

Pluggable database altered.This is what I get on the first standby:

2020-07-07T12:03:02.364271+02:00 Recovery created pluggable database PNEUMA PNEUMA(5):Tablespace-SYSTEM during PDB create skipped since source is in r/w mode or this is a refresh clone PNEUMA(5):File #20 added to control file as 'UNNAMED00020'. Originally created as: PNEUMA(5):'/u02/oradata/TOOLCDB1/data/PNEUMA/system01.dbf' PNEUMA(5):because the pluggable database was created with nostandby PNEUMA(5):or the tablespace belonging to the pluggable database is PNEUMA(5):offline. PNEUMA(5):Tablespace-SYSAUX during PDB create skipped since source is in r/w mode or this is a refresh clone PNEUMA(5):File #21 added to control file as 'UNNAMED00021'. Originally created as: PNEUMA(5):'/u02/oradata/TOOLCDB1/data/PNEUMA/sysaux01.dbf' PNEUMA(5):because the pluggable database was created with nostandby PNEUMA(5):or the tablespace belonging to the pluggable database is PNEUMA(5):offline. PNEUMA(5):Tablespace-UNDOTBS1 during PDB create skipped since source is in r/w mode or this is a refresh clone PNEUMA(5):File #22 added to control file as 'UNNAMED00022'. Originally created as: PNEUMA(5):'/u02/oradata/TOOLCDB1/data/PNEUMA/undotbs01.dbf' PNEUMA(5):because the pluggable database was created with nostandby PNEUMA(5):or the tablespace belonging to the pluggable database is PNEUMA(5):offline. PNEUMA(5):Tablespace-TEMP during PDB create skipped since source is in r/w mode or this is a refresh clone PNEUMA(5):Tablespace-USERS during PDB create skipped since source is in r/w mode or this is a refresh clone PNEUMA(5):File #23 added to control file as 'UNNAMED00023'. Originally created as: PNEUMA(5):'/u02/oradata/TOOLCDB1/data/PNEUMA/USERS01.dbf' PNEUMA(5):because the pluggable database was created with nostandby PNEUMA(5):or the tablespace belonging to the pluggable database is PNEUMA(5):offline.

and this is on the cascaded standby:

2020-07-07T12:03:02.368014+02:00 Recovery created pluggable database PNEUMA PNEUMA(5):Tablespace-SYSTEM during PDB create skipped since source is in r/w mode or this is a refresh clone PNEUMA(5):File #20 added to control file as 'UNNAMED00020'. Originally created as: PNEUMA(5):'/u02/oradata/TOOLCDB1/data/PNEUMA/system01.dbf' PNEUMA(5):because the pluggable database was created with nostandby PNEUMA(5):or the tablespace belonging to the pluggable database is PNEUMA(5):offline. PNEUMA(5):Tablespace-SYSAUX during PDB create skipped since source is in r/w mode or this is a refresh clone PNEUMA(5):File #21 added to control file as 'UNNAMED00021'. Originally created as: PNEUMA(5):'/u02/oradata/TOOLCDB1/data/PNEUMA/sysaux01.dbf' PNEUMA(5):because the pluggable database was created with nostandby PNEUMA(5):or the tablespace belonging to the pluggable database is PNEUMA(5):offline. PNEUMA(5):Tablespace-UNDOTBS1 during PDB create skipped since source is in r/w mode or this is a refresh clone PNEUMA(5):File #22 added to control file as 'UNNAMED00022'. Originally created as: PNEUMA(5):'/u02/oradata/TOOLCDB1/data/PNEUMA/undotbs01.dbf' PNEUMA(5):because the pluggable database was created with nostandby PNEUMA(5):or the tablespace belonging to the pluggable database is PNEUMA(5):offline. PNEUMA(5):Tablespace-TEMP during PDB create skipped since source is in r/w mode or this is a refresh clone PNEUMA(5):Tablespace-USERS during PDB create skipped since source is in r/w mode or this is a refresh clone PNEUMA(5):File #23 added to control file as 'UNNAMED00023'. Originally created as: PNEUMA(5):'/u02/oradata/TOOLCDB1/data/PNEUMA/USERS01.dbf' PNEUMA(5):because the pluggable database was created with nostandby PNEUMA(5):or the tablespace belonging to the pluggable database is PNEUMA(5):offline.

So absolutely the same behavior between the two levels of standby.

According to the documentation: https://docs.oracle.com/en/database/oracle/oracle-database/19/sqlrf/CREATE-PLUGGABLE-DATABASE.html#GUID-F2DBA8DD-EEA8-4BB7-A07F-78DC04DB1FFC

I quote what is specified for the parameter STANDBYS={ALL|NONE|…}:

“If you include a PDB in a standby CDB, then during standby recovery the standby CDB will search for the data files for the PDB. If the data files are not found, then standby recovery will stop and you must copy the data files to the correct location before you can restart recovery.”

“Specify ALL to include the new PDB in all standby CDBs. This is the default.”

“Specify NONE to exclude the new PDB from all standby CDBs. When a PDB is excluded from all standby CDBs, the PDB’s data files are unnamed and marked offline on all of the standby CDBs. Standby recovery will not stop if the data files for the PDB are not found on the standby. […]”

So, in order to avoid the MRP to crash, I should have included STANDBYS=NONE

But the documentation is not up to date, because in my case the PDB is skipped automatically and the recovery process DOES NOT STOP:

SQL> r 1* select process, status, sequence#, client_process from v$managed_standby PROCESS STATUS SEQUENCE# CLIENT_P --------- ------------ ---------- -------- ARCH CONNECTED 0 ARCH DGRD ALLOCATED 0 N/A DGRD ALLOCATED 0 N/A ARCH CLOSING 43 ARCH ARCH CLOSING 40 ARCH ARCH CLOSING 42 ARCH RFS IDLE 0 Archival RFS IDLE 0 UNKNOWN RFS IDLE 44 LGWR RFS IDLE 0 UNKNOWN MRP0 APPLYING_LOG 44 N/A LNS WRITING 44 LNS DGRD ALLOCATED 0 N/A 13 rows selected.

However, the recovery is marked ENABLED for the PDB on the standby, while usind STANDBYS=NONE it would have been DISABLED.

1* select name, recovery_status from v$pdbs NAME RECOVERY ------------------------------ -------- PDB$SEED ENABLED LATERALUS ENABLED PNEUMA ENABLED

So, another difference with the doc who states:

“You can enable a PDB on a standby CDB after it was excluded on that standby CDB by copying the data files to the correct location, bringing the PDB online, and marking it as enabled for recovery.”

This reflects the findings of Philippe Fierens in his blog (http://pfierens.blogspot.com/2020/04/19c-data-guard-series-part-iii-adding.html).

This behavior has been introduced probably between 12.2 and 19c, but I could not manage to find exactly when, as it is not explicitly stated in the documentation.

However, I remember well that in 12.1.0.2, the MRP process was crashing.

In my configuration, not on purpose, but interesting for this article, the first standby has the very same directory structure, while the cascaded standby has not.

In any case, there is a potentially big problem for all the customers implementing Multitenant on Data Guard:

With the old behaviour (MRP crashing), it was easy to spot when a PDB was cloned online into a primary database, because a simple dgmgrl “show configuration” whould have displayed a warning because of the increasing lag (following the MRP crash).

With the current behavior, the MRP keeps recovering and the “show configuration” displays “SUCCESS” despite there is a PDB not copied on the standby (thus not protected).

Indeed, this is what I get after the clone:

DGMGRL> show configuration;

Configuration - toolcdb1

Protection Mode: MaxPerformance

Members:

toolcdb1_site1 - Primary database

toolcdb1_site2 - Physical standby database

toolcdx1_site2 - Physical standby database (receiving current redo)

Fast-Start Failover: Disabled

Configuration Status:

SUCCESS (status updated 21 seconds ago)

DGMGRL> show database toolcdb1_site2;

Database - toolcdb1_site2

Role: PHYSICAL STANDBY

Intended State: APPLY-ON

Transport Lag: 0 seconds (computed 1 second ago)

Apply Lag: 0 seconds (computed 1 second ago)

Average Apply Rate: 8.00 KByte/s

Real Time Query: ON

Instance(s):

TOOLCDB1

Database Status:

SUCCESSI can see that the Data Guard Broker is completely silent about the missing PDB. So I might think my PDB is protected while it is not!

I actually have to add a check on the standby DBs to check if I have any missing datafiles:

1* select con_id, name, status from v$datafile where status not in ('SYSTEM','ONLINE');

CON_ID NAME STATUS

---------- ----------------------------------------------------- -------

5 /u01/app/oracle/product/db_19_7_0/dbs/UNNAMED00020 SYSOFF

5 /u01/app/oracle/product/db_19_7_0/dbs/UNNAMED00021 RECOVER

5 /u01/app/oracle/product/db_19_7_0/dbs/UNNAMED00022 RECOVER

5 /u01/app/oracle/product/db_19_7_0/dbs/UNNAMED00023 RECOVER

Although this first query seems OK to get the missing datafiles, actually the next one is the correct one to use:

SQL> select * from v$recover_file where online_status='OFFLINE';

FILE# ONLINE ONLINE_ ERROR CHANGE# TIME CON_ID

---------- ------- ------- ---------------- ---------- ------------ ----------

20 OFFLINE OFFLINE FILE MISSING 0 5

21 OFFLINE OFFLINE FILE MISSING 0 5

22 OFFLINE OFFLINE FILE MISSING 0 5

23 OFFLINE OFFLINE FILE MISSING 0 5This check should be implemented and put under monitoring (custom metrics in OEM?)

SQL> select 'ERROR: CON_ID '||con_id||' has '||count(*)||' datafiles offline!' from v$recover_file where online_status='OFFLINE' group by con_id; 'ERROR:CON_ID'||CON_ID||'HAS'||COUNT(*)||'DATAFILESOFFLINE!' -------------------------------------------------------------------------------- ERROR: CON_ID 5 has 4 datafiles offline!

The missing PDB is easy to spot once I know that I have to do it. However, for each PDB to recover (I might have many!), I have to prepare the rename of datafiles and creation of directory (do not forget I am using non-OMF here).

Now, the datafile names on the standby got changed to …/UNNAMEDnnnnn.

So I have to get the original ones from the primary database and do the same replace that db_file_name_convert would do:

set trim on col rename_file for a300 set lines 400 select 'set newname for datafile '||file#||' to '''||replace(name,'/TOOLCDB1/','/TOOLCDX1/')||''';' as rename_file from v$datafile where con_id=6;

and put this in a rman script (this will be for the second standby, the first has the same name so same PATH):

run {

set newname for datafile 20 to '/u02/oradata/TOOLCDX1/data/PNEUMA/system01.dbf';

set newname for datafile 21 to '/u02/oradata/TOOLCDX1/data/PNEUMA/sysaux01.dbf';

set newname for datafile 22 to '/u02/oradata/TOOLCDX1/data/PNEUMA/undotbs01.dbf';

set newname for datafile 23 to '/u02/oradata/TOOLCDX1/data/PNEUMA/USERS01.dbf';

restore pluggable database PNEUMA from service 'newbox01:1521/TOOLCDB1_SITE1_DGMGRL' ;

}

switch pluggable database PNEUMA to copy;

executing command: SET NEWNAME

executing command: SET NEWNAME

executing command: SET NEWNAME

executing command: SET NEWNAME

Starting restore at 07-JUL-2020 14:19:22

using target database control file instead of recovery catalog

allocated channel: ORA_DISK_1

channel ORA_DISK_1: SID=1530 device type=DISK

channel ORA_DISK_1: starting datafile backup set restore

channel ORA_DISK_1: using network backup set from service newbox01:1521/TOOLCDB1_SITE1_DGMGRL

channel ORA_DISK_1: specifying datafile(s) to restore from backup set

channel ORA_DISK_1: restoring datafile 00020 to /u02/oradata/TOOLCDB1/data/PNEUMA/system01.dbf

channel ORA_DISK_1: restore complete, elapsed time: 00:00:03

channel ORA_DISK_1: starting datafile backup set restore

channel ORA_DISK_1: using network backup set from service newbox01:1521/TOOLCDB1_SITE1_DGMGRL

channel ORA_DISK_1: specifying datafile(s) to restore from backup set

channel ORA_DISK_1: restoring datafile 00021 to /u02/oradata/TOOLCDB1/data/PNEUMA/sysaux01.dbf

channel ORA_DISK_1: restore complete, elapsed time: 00:00:07

channel ORA_DISK_1: starting datafile backup set restore

channel ORA_DISK_1: using network backup set from service newbox01:1521/TOOLCDB1_SITE1_DGMGRL

channel ORA_DISK_1: specifying datafile(s) to restore from backup set

channel ORA_DISK_1: restoring datafile 00022 to /u02/oradata/TOOLCDB1/data/PNEUMA/undotbs01.dbf

channel ORA_DISK_1: restore complete, elapsed time: 00:00:03

channel ORA_DISK_1: starting datafile backup set restore

channel ORA_DISK_1: using network backup set from service newbox01:1521/TOOLCDB1_SITE1_DGMGRL

channel ORA_DISK_1: specifying datafile(s) to restore from backup set

channel ORA_DISK_1: restoring datafile 00023 to /u02/oradata/TOOLCDB1/data/PNEUMA/USERS01.dbf

channel ORA_DISK_1: restore complete, elapsed time: 00:00:07

Finished restore at 07-JUL-2020 14:19:43

datafile 20 switched to datafile copy "/u02/oradata/TOOLCDB1/data/PNEUMA/system01.dbf"

datafile 21 switched to datafile copy "/u02/oradata/TOOLCDB1/data/PNEUMA/sysaux01.dbf"

datafile 22 switched to datafile copy "/u02/oradata/TOOLCDB1/data/PNEUMA/undotbs01.dbf"

datafile 23 switched to datafile copy "/u02/oradata/TOOLCDB1/data/PNEUMA/USERS01.dbf"Then, I need to stop the recovery, start it and stopping again, put the datafiles online and finally restart the recover.

These are the same steps used my Philippe in his blog post, just adapted to my taste

DGMGRL> edit database "TOOLCDB1_SITE2" set state='APPLY-OFF';

For the second part, I use this HEREDOC to online all offline datafiles:

$ sqlplus / as sysdba <<EOF

RECOVER STANDBY DATABASE UNTIL CANCEL;

CANCEL

ALTER SESSION SET CONTAINER=PNEUMA;

DECLARE

CURSOR c_fileids IS

SELECT file# FROM v\$recover_file where online_STATUS='OFFLINE';

r_fileid c_fileids%ROWTYPE;

BEGIN

OPEN c_fileids;

LOOP

FETCH c_fileids INTO r_fileid;

EXIT WHEN c_fileids%NOTFOUND;

BEGIN

EXECUTE IMMEDIATE 'ALTER DATABASE DATAFILE '||to_char(r_fileid.file#)||' ONLINE';

END;

END LOOP;

END;

/

exit

EOFand finally:

DGMGRL> edit database "TOOLCDB1_SITE2" set state='APPLY-ON';

Now, I do not have anymore any datafiles offline on the standby:

SQL> select 'ERROR: CON_ID '||con_id||' has '||count(*)||' datafiles offline!' from v$recover_file where online_status='OFFLINE' group by con_id; no rows selected

I will not publish the steps for the second standby, they are exactly the same (same output as well).

At the end, for me it is important to highlight that monitoring the OFFLINE datafiles on the standby becomes a crucial point to guarantee the health of Data Guard in Multitenant. Relying on the Broker status or “PDB recovery disabled” is not enough.

On the bright side, it is nice to see that Cascade Standby configurations do not introduce any variation, so cascaded standbys can be threated the same as “direct” standby databases.

HTH

—

Ludovico

)

)

This is where you can find me during OCW.

This is where you can find me during OCW.